Major platforms are not labeling AI-generated videos

A Washington Post exposé

The headline from this week’s Washington Post is upsetting if hardly a surprise (this is a gift link to the article):

“We uploaded a fake video to 8 social apps. Only one told users it wasn’t real.”

The subhead: “Facebook, TikTok and other major platforms do not use a tech industry standard touted as a way to flag fake content, tests using AI-generated videos found.”

The article, written by Kevin Schaul and published six days ago, is the first I have seen that looks behind tech companies’ promises to inform the reader when visual media is AI-generated. It begins:

“As new artificial intelligence tools generated a cascade of increasingly realistic fake videos and images online, tech companies developed a plan to prevent mass confusion.

“Companies such as ChatGPT maker OpenAI pledged to imbue each fake video with a tamperproof marker to signal it was AI-generated. Social media platforms including Facebook and TikTok said they would make those markers visible to users, allowing viewers to know when content was fake and experts to trace its origins.

“The system was supposed to provide a digital ground-truth to help guard against deepfakes disrupting elections or inciting turmoil. Tech companies made it part of their response to government regulators asking for safeguards on AI’s power.

“But tests by The Washington Post on eight major social platforms found that only one of them added a warning to an uploaded AI video. That disclosure, by Google’s YouTube, was hidden from view inside a description attached to the clip.”

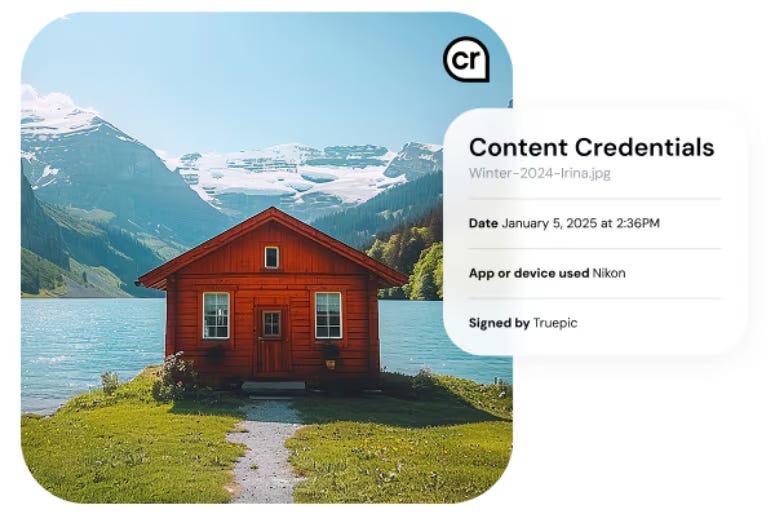

The testing included a search for Content Credentials, an industry-wide standard pioneered in 2021 by companies including Adobe, Microsoft and the BBC. But there were problems: “No platform that The Post tested kept the Content Credentials data on the video or let users access it. Only YouTube displayed any indication that the video was not real, but the platform’s disclosure was added to the description attached to a video, which is only visible to users who click through. It described the clip as ‘Altered or synthetic content’ and did not mention AI.”

Participation in the Content Credentials initiative is voluntary, Schaul explains, and “if social media platforms don't implement the standard, it is effectively useless.”

And as one researcher, Arosha Bandara, put it: “‘The potential dystopia is that all content, all video, photography, has to be mistrusted until it can be positively authenticated as being real.’”

Are we surprised? Multiple strategies will have to be implemented with strenuous support from the communities that require credible visual media, including journalism, law, forensics, education, archives, and, of course, government. And there must be penalties for abusing the viewer’s trust.

Forthcoming:

I’ll be giving a series of lectures and teaching a Masterclass on image-making and social justice in collaboration with StrudelmediaLive.

It will begin with a three-part lecture series open to all with proceeds ($10 suggested donation) going to the Committee to Protect Journalists. It will be followed by two five-week classes offering an opportunity for image-makers from around the world to work in a small-group setting. Admission is by application. Limited scholarships are also available.

The lecture series, “Toward Authentic Visual Narratives in an Age of Menace and Confusion,” will comprise three lectures: “Images and Ideas: An Introduction”; “Image‑based Strategies for Social Change”; and “Impactful Imagery in the Age of AI.” Following each lecture, breakout rooms with moderators will be available for those who want to pursue the discussion further.

Please check the StrudelmediaLive website for more information.

Please consider subscribing, if at all possible as a paid subscriber. It would be very much appreciated.